There’s something you should know about relational databases.

They were designed to store data efficiently, protecting the quality of the data written and stored to disk. I’ve written before about relational engines favoring data quality and integrity, and how relational databases were not designed for the reading of data.

Of course, if you are going through the trouble of writing the data into a relational database, it makes sense that you would want to retrieve the data at some point. Otherwise, why go through the exercise of storing the data inside the database?

The trouble with reading data from a relational database is that the data is not stored in a format that is friendly for viewing, reading, or retrieving. That’s why we have data professionals, like me, to help you write queries that return the correct data, in the correct format, for you to analyze.

I’m here today to tell you we’ve been doing data wrong the whole damn time.

Let me show you what I mean.

Traditional Data Flow Patterns

Here’s what companies do, every day:

Step 1 – Identify useful data

Step 2 – Import that data into a database

Step 3 – Analyze the data

Step 4 – Export the data into dashboards

Step 5 – Profit (maybe)

The trouble with this process is Step 3, the analyzing of the data. Relational databases were not designed for analytical processing. Relational databases do not store data in a way that is readable, or friendly, for human analysis.

That’s not to say you can’t do analytics inside of a relational database. What I am saying is that it could be better for you not to spin the CPU cycles there, and instead do the analytics somewhere else.

For example, data warehouses help with data storage, retrieval, and analytics. But even a data warehouse can fall short when it comes to the use of unstructured data sources. As a result, we’ve spent decades building ETL processes to curate, collate, consolidate, and consume data.

And we’ve been doing it wrong.

Data Mining for the Data Expert

So, we understand that people find data, store it, and try to use it later. They are engaging in the process of data mining, hoping to find gold in the form of insights, leading to better business decisions.

But as I mentioned before, the data isn’t in a readable format. Let’s look at an example.

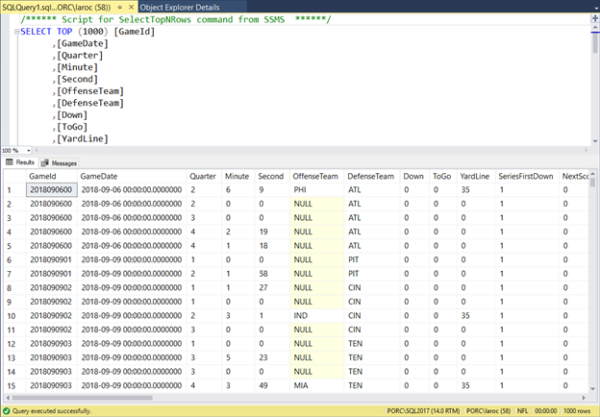

Following the common data flow pattern, I found some useful data at NFL Savant (https://nflsavant.com/about.php) and imported the data into a SQL Server database:

It looks like any other table in a relational database. In this case, it is a table containing time series data: play-by-play records for the 2018 NFL season. Each row represents an entity (a play at a point in time of an NFL game), and the columns represent attributes of the play (down, distance, yards to go, etc.).

Nothing out of place here. This is how data is written to a relational database: in an orderly fashion. As a DBA, I love this type of orderly storage. It’s efficient, and efficient is good.

As a data analyst, I’m not a fan. At least, not yet. I have a bunch of data, but what I want are some answers. So, it’s up to me to ask some questions of the data, find some answers, and use that to help make better business decisions.

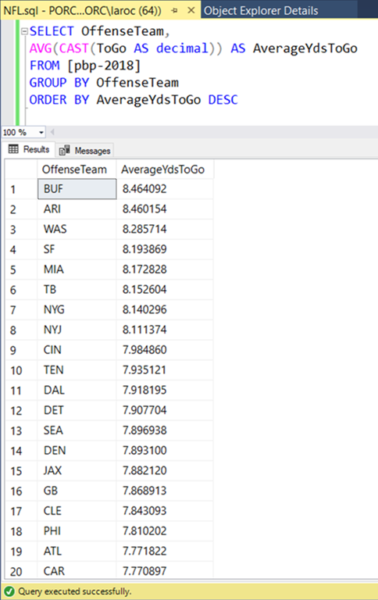

For this data, here’s an example of a simple question: What are the average yards to go for NFL teams in 2018? I can get that answer with some simple T-SQL:
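As a rough, runnable sketch of that kind of question, the Python script below uses the standard-library sqlite3 module as a stand-in for SQL Server. The table name pbp, the column names OffenseTeam, Quarter, and ToGo, and the handful of sample rows are all assumptions made up for illustration. They are not the actual query or the real 2018 data.

```python
import sqlite3

# Hypothetical sample rows: (OffenseTeam, Quarter, ToGo). Not real 2018 data.
PLAYS = [
    ("GB", 1, 10),
    ("GB", 1, 4),
    ("GB", 2, 7),
    ("CHI", 1, 10),
    ("CHI", 2, 2),
    ("CHI", 2, 6),
]

# One question, one query: average yards to go per offense team.
AVG_TOGO_SQL = """
SELECT OffenseTeam, AVG(ToGo) AS AvgToGo
FROM pbp
GROUP BY OffenseTeam
ORDER BY OffenseTeam
"""


def load_plays(rows):
    """Create an in-memory table (assumed schema) and load the sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE pbp (OffenseTeam TEXT, Quarter INTEGER, ToGo INTEGER)"
    )
    conn.executemany("INSERT INTO pbp VALUES (?, ?, ?)", rows)
    return conn


def avg_to_go_by_team(conn):
    """Return a list of (team, average yards to go) tuples."""
    return conn.execute(AVG_TOGO_SQL).fetchall()


if __name__ == "__main__":
    for team, avg in avg_to_go_by_team(load_plays(PLAYS)):
        print(f"{team}: {avg:.2f}")
```

One difference worth knowing if you port this to T-SQL: in SQL Server, AVG over an integer column returns an integer, so you would typically cast the column to a decimal type first. SQLite returns a floating-point average here.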

This is great! I was able to take my data, ask a question, and get an answer. What could be better, right?

Of course, now I have more questions about my data. And here’s the first issue you will discover when trying to analyze data stored in a traditional relational database.

T-SQL is excellent at answering one question at a time, but not as great when you need more than one question answered.

So, if we have more questions, we will need to write more queries.

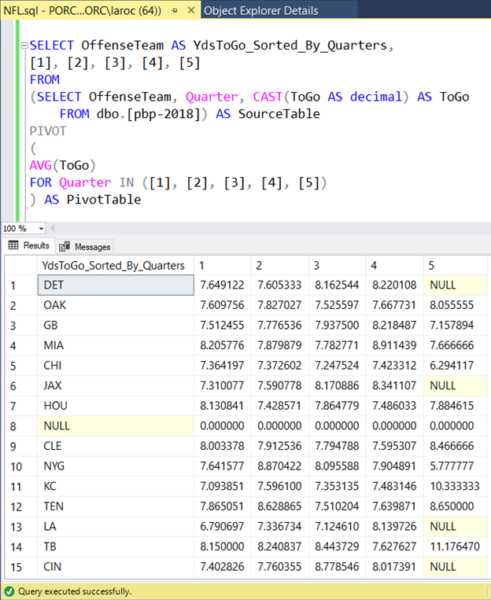

Here’s a good follow-up question that we might want answered: Can we examine this data broken down by each quarter?

Fortunately, the answer is yes, because T-SQL comes with a bunch of statements and functions that will help. In this case, I am going to use the PIVOT operator, as follows:

Easy, right?

No, not easy.

And not readable, either. What’s with that row saying NULL? Why do I not have a result for some teams in that last column?

As it turns out, you need a lot of experience writing T-SQL to get to that query. And you need more experience understanding the result set, too. You don’t start on Day 0 as a data professional writing PIVOT queries against a SQL Server database.
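To make the pivot step more concrete, here is a minimal sketch, again in Python with sqlite3 standing in for SQL Server. SQLite has no PIVOT operator, so this uses conditional aggregation (AVG over a CASE expression), which is a different technique that produces the same shape of result. The table, columns, and rows are hypothetical. In this made-up sample, one row has no offense team and only one team has a fifth quarter (overtime), which is one plausible way to end up with a NULL row and empty cells in the last column. Whether that explains the result described above is an assumption.

```python
import sqlite3

# Hypothetical sample rows: (OffenseTeam, Quarter, ToGo). Quarter 5 = overtime.
PLAYS = [
    ("GB", 1, 10), ("GB", 2, 6), ("GB", 3, 8), ("GB", 4, 4), ("GB", 5, 10),
    ("CHI", 1, 10), ("CHI", 2, 2), ("CHI", 3, 5), ("CHI", 4, 7),
    (None, 2, 0),  # a row with no offense team recorded
]

QUARTERS = [1, 2, 3, 4, 5]

# Build one AVG(CASE ...) column per quarter. A CASE with no ELSE yields NULL,
# and AVG ignores NULLs, so each column averages only that quarter's plays.
PIVOT_SQL = (
    "SELECT OffenseTeam, "
    + ", ".join(
        f"AVG(CASE WHEN Quarter = {q} THEN ToGo END) AS Q{q}" for q in QUARTERS
    )
    + " FROM pbp GROUP BY OffenseTeam ORDER BY OffenseTeam"
)


def load_plays(rows):
    """Create an in-memory table (assumed schema) and load the sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE pbp (OffenseTeam TEXT, Quarter INTEGER, ToGo INTEGER)"
    )
    conn.executemany("INSERT INTO pbp VALUES (?, ?, ?)", rows)
    return conn


def avg_to_go_by_team_and_quarter(conn):
    """Return one row per team with one average column per quarter."""
    return conn.execute(PIVOT_SQL).fetchall()


if __name__ == "__main__":
    for row in avg_to_go_by_team_and_quarter(load_plays(PLAYS)):
        print(row)
```

Even in this small form, the second question needed a second, longer query, and reading the output still means knowing that an empty cell stands for "no plays in that quarter" and not for zero.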

Here’s the good news: You don’t need to write PIVOT queries, ever.

Data Mining for the Masses

The data import from NFL Savant was in the form of a CSV file, which I then imported into my database. Because that’s how ETL is done (see above for the common data flow process).

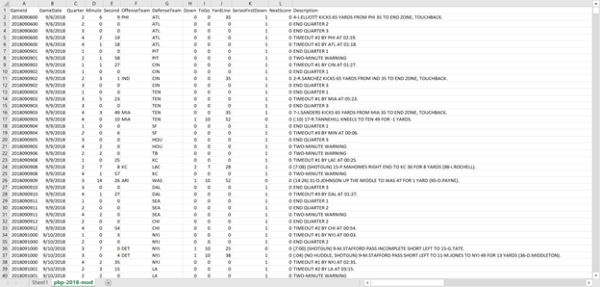

What if…now hear me out…we skipped step 2? Forget about doing the import process. Instead, let’s open that CSV file in Excel.

Here’s what it would look like:

Back to our football questions. We’ve seen examples in T-SQL; now let’s look at how to do this in Excel using a pivot table.

I click on one cell in Excel, insert a pivot table, drag the offense team into the rows and the yards to go into the values, change the summary to an average, and we are done. Have a look:

It took but a few seconds to get this magic to happen. Here’s what I want you to know:

1. No T-SQL is necessary. None. Not one line of code.

2. I have the entire table as a pivot table, allowing me to answer more questions WITHOUT needing to write more T-SQL.

3. There is no code. None.

Let’s say that I want to know the yards to go broken down by quarter. With T-SQL, I would need to write a new query. With the pivot table, it’s a simple drag and drop, like this:

Fin.

There is no need to rewrite code to get this result, because there is no code. It’s drag and drop, and then I have my answer.

And that’s why I believe the inclusion of pivot tables in Excel is the greatest advancement in the 21st century for data professionals.
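If you are curious what a pivot table is doing behind the drag and drop, the idea can be sketched in a few lines of Python that read CSV text directly, with no database import at all. This is only an illustration of the concept, not how Excel implements it. The function name pivot_mean, the column names, and the sample rows are hypothetical.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical CSV content standing in for the downloaded file.
CSV_TEXT = """OffenseTeam,Quarter,ToGo
GB,1,10
GB,1,4
GB,2,7
CHI,1,10
CHI,2,2
CHI,2,6
"""


def read_rows(text):
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def pivot_mean(rows, row_field, col_field, value_field):
    """Average value_field for every (row_field, col_field) combination."""
    cells = defaultdict(list)
    for r in rows:
        cells[(r[row_field], r[col_field])].append(float(r[value_field]))
    return {key: mean(values) for key, values in cells.items()}


if __name__ == "__main__":
    table = pivot_mean(read_rows(CSV_TEXT), "OffenseTeam", "Quarter", "ToGo")
    for (team, quarter), avg in sorted(table.items()):
        print(f"{team} Q{quarter}: {avg:.2f}")
```

Asking a different question here means passing different field names, which is roughly what dragging a different field into the pivot table does for you.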

Fight me.

Summary

I did not come here to bury relational databases. I came here to help you understand relational databases may not be the right place to do analytical processing.

When it comes to curating and consuming data, I have three simple rules for you to follow:

Rule #1 – Only collect data that you need. Don’t collect data “just in case you may need it later.” The data you collect must be relevant for your needs right now.

Rule #2 – Understand that all data is dirty. You could build a perfect analytical solution that is still based on inaccurate data. Know the risks involved in making business decisions based on dirty data.

Rule #3 – Before you collect any data, consider where the data will be processed. Don’t just assume that your database will do everything you need. Take time to list out all the available tools and systems at your disposal. The result may be a simpler solution than first imagined.

I wrote this post to help you understand Rule #3. Analysis of NFL play-by-play data is best done in a tool such as Excel or Power BI, and not (necessarily) inside of SQL Server.

SQL Server is a robust relational database engine, containing integrations with data science-y stuff such as R and Python. Just because you could do your analysis inside the SQL Server engine doesn’t mean you should.

This post originally appeared on PowerPivotPro and I was reminded about its existence while talking with Rob Collie during our Raw Data podcast. I asked Rob if I could repost here. He said yes. True story.