The internet provides everyone the ability to access data at any time, for any need. Unfortunately, it does not help guarantee that the data is valid, or clean.

In the past year I have earned certifications in three areas: Data Science, Big Data, and Artificial Intelligence. Those studies have provided me the opportunity to explore the world of data that exists. For example, Kaggle is a great source of data. They also offer competitions, if you are the type of person that enjoys money.

Today I want to spend time showing you an example of dirty data. Years ago, Rob Collie showed us that UFO pilots are attracted to LSD, but prefer ecstasy. As a follow up, let’s look at the National UFO Reporting Center Online Database. Or, as I like to call it, “what happens when you allow stoners to perform data entry”.

Let’s get started.

Dirty Data Example

The National UFO Reporting Center Online Database can be found at: http://www.nuforc.org/webreports.html

Navigate to that page, and once there click on the ‘Index by STATE’ link. Now notice at the top of the page there is a link for ‘UNSPECIFIED/INTERNATIONAL’.

OK, so let’s pause here for a minute. Judging by the word STATE and the list of US states and Canadian provinces, I assume this database has a North American focus. But there are more than 8,000 sightings listed as ‘UNSPECIFIED/INTERNATIONAL’. This doesn’t seem right to me, and I am now curious to know where the majority of these sightings are taking place. So, let’s download some data and get it into a map inside of PowerBI.

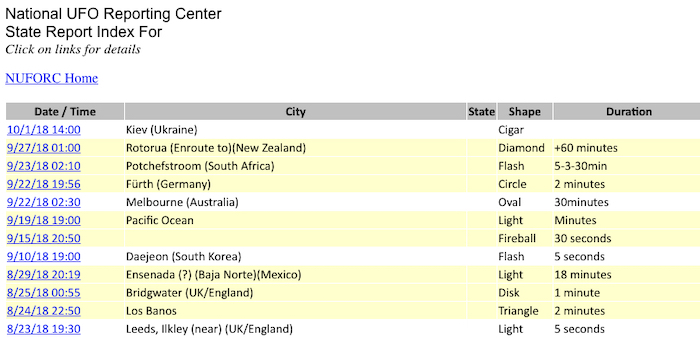

First, let’s examine that data by clicking the link. I want to see what the data looks like, and here is what I find:

Then, using Excel we import the data, using the ‘From Web’ option in the Data tab:

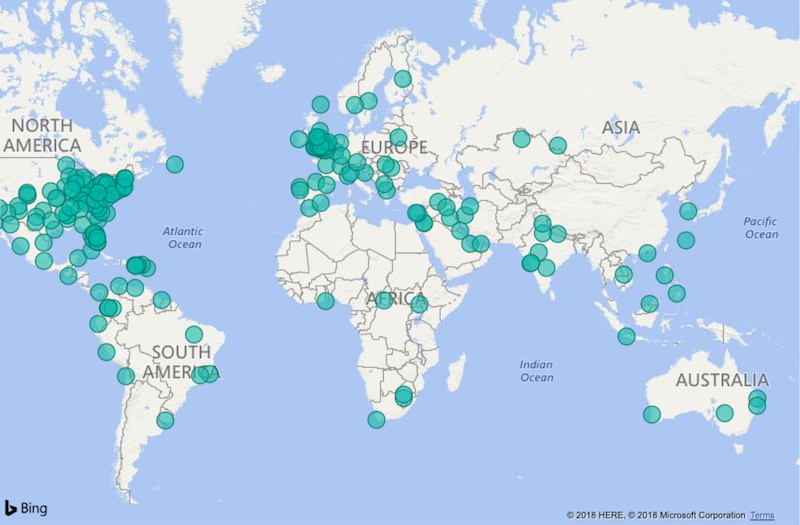

This downloads the data into Excel, and I will save the data as a CSV file. I will then import the CSV file into PowerBI. After the data is loaded I will create a map:

So far this has taken me less than 10 minutes to download roughly 8,000 rows, import those rows into PowerBI, generate this map, and see…I see…

Look at those bubbles inside the USA. I suppose those are “international” to someone not in the USA. But it is clear there is a disconnect in how this website is expecting data to be entered, and how the users are entering data. Alcohol is likely a factor, I’m certain. But it’s clear to me that we have dirty data.

Just look at the first row. The entry says ‘Kiev (Ukraine)’. That’s two different labels (City, Country) in one field (i.e., column). This could explain why the database classifies this entry as unspecified.

The PowerBI map made it easy for me to visualize the dirty data. But you won’t be working with location data on every project. You’ll need to find different ways to determine if your data is dirty.

[SPOILER ALERT]: All data is dirty.

Data Has Always Been Dirty

There’s a series of videos from Dr. Richard Hammond, taken from lectures in 1995. I believe these videos should be required viewing for every data professional. There’s one video, in particular, that I’d suggest you watch, as it is related to the topic today. The video title is “Unreliable Data“, and it is Dr. Hammond delivering a no-nonsense lecture recalling his experiences with data over many decades.

[Side note – this is a wonderful example of how you can deliver a great presentation without needing fancy slides, pictures of cats, or code demos.]

Dr. Hammond has some wonderful insights to share in the video. Have a look:

Watch and listen to the wisdom Dr. Hammond shares with the class. Here’s the TL:DR summary for you:

There is never time to do it right, but somehow you think there will be time to fix it later.

OK, so where does all this dirty data come from?

The Origins of Dirty Data

The title of this post is a quote from my friend Oz du Soleil (website | @ozexcel). It came up in conversation one night at the Microsoft MVP Summit many years ago. For a data professional like myself, it’s one of those phrases that just sticks in your head and never leaves. Mostly because I spend lots of time cleaning data for various projects.

Your data gets dirty through a variety of ways. Here’s but a few examples:

Duplicate data – A single event is recorded and entered twice into your dataset.

Missing data – Fields that should contain values, do not.

Invalid data – Information not entered correctly, or not maintained.

Bad data – Typos, Transpositions, variations in spelling, or formatting (say hello to unicode!)

Inappropriate data – Data entered in the wrong field.

As Dr. Hammond suggests, it’s difficult to determine if data is ever clean. Even scientific constants have a degree of accuracy. They are “good enough”, but not perfect.

Data’s ultimate purpose is to drive decisions. Bad data means bad decisions.

As a data professional it is up to us to help keep data “good enough” for use by others. We have to think of ourselves as data janitors.

But nobody goes to school to become a data janitor. Let’s talk about options for cleaning dirty data.

Data Cleaning Techniques

Here’s a handful of techniques that you should consider when working with data. Remember, all data is dirty, you won’t be able to make it perfect. Your focus should be making it “good enough” to pass along to the next person.

The first thing you should do when working with a dataset is to examine the data. Ask yourself “does this data make sense“? That’s what we did in the example above. We looked at the first few rows of data and found that both the city and country listed inside of one column.

Then, before you do anything else, make a copy, or backup, of your data before you begin to make the smallest change. I cannot stress this enough.

OK, so we’ve examined the data to see if it makes sense, and we have a copy. Here’s a few data cleaning techniques.

Identify and remove duplicate data – Tools such as Excel and PowerBI make this easy. Of course, you’ll need to know if the data is duplicated, or two independent observations. For relational databases we often use primary keys as a way to enforce this uniqueness of the records. But such constraints aren’t available for every system that is logging data.

Remove data that doesn’t fit – Data entered that doesn’t help you answer the question you are asking. In our example, if I want North America sightings, I would remove all entries logged as outside North America.

Identify and fix issues with spelling, etc. – There’s lots of ways to manipulate strings to help get your data formatted and looking pretty. For example, you could use the TRIM function to remove spaces from the text in a column, then sort the data and look for things like capitalization and spelling. There’s also regional terms, like calling a sugary beverage “pop” or “soda”.

Normalize data – Set a standard for the data. If the data is a number, make sure it is a number, not text. If it is categorical, make sure it has entries that apply for that category. Spelling, capitalization, etc., are all ways to set standards and normalize data to some degree.

Remove outliers – But only when it makes sense to do so! If the outlier was due to poor collection, then it could be safe to remove. Dr. Hammond suggested that “for 90% of the time, the next independent measurement will be outside the 90% confidence interval”. I trust his judgement here, so be mindful that outliers are innocent until proven guilty.

Fix missing data – This gets…tricky. You have two options here. Either you remove the record, or you update the missing value. Yes, this is how we get faux null values. For categorical data I suggest you set the data to the word ‘missing’. For numerical data, set the value to 0, or to the average of the field. I avoid using faux nulls for any data, unless it makes sense to note the absence of information collected. Your mileage may vary.

Summary

Life is dirty. So’s your data. Get used to it.

I encourage you to work with datasets, like the ones at Kaggle. Then walk through the techniques I discussed here. Ask yourself if the data makes sense. Think about what you might do to make the data cleaner, if necessary.

Get familiar with tools like Python, Excel, and PowerBI and how they can help you with data cleaning.

And remember that no matter how much you scrub that data, it will never be clean, but it will be good enough.

WOW! That stuck with you?

YUP! Data is dirty. The question is: how clean is “clean enough.”

Yes, it did stick with me. You are a wise man. I’m lucky that our paths crossed as we walk this Earth.

Great video. Real world examples for IT and business to get a grasp of the difficulties we face.

Yeah, the entire playlist is gold, IMO. It should be required viewing for anyone entering into IT, in any role.

Be careful of data cleaning without consulting the business. For example: We have found that we were using the wrong key fields and that the data was right. We had cleansed data and introduced more dirty data.

Yes, excellent point, thanks for the comment!