Seriously? You did what now?

OK, I’m sure it’s all shiny and new and takes up less space in your data center. That sounds wonderful, truly.

But did you try anything else before agreeing to spend money like a sailor on shore leave? I’m here today to help you understand your performance tuning options before you decide to throw hardware at the problem.

Well, I’m here today to help you understand your performance tuning options before you decide to throw hardware at the problem.

Allow me to present to you, dear reader, the following three facts:

FACT #1: CPUs are faster now than ever before.

FACT #2: SSDs are replacing spinning disks.

FACT #3: You can have database servers with TB(!) of RAM.

And yet people still suffer from poor database performance!

Why?

Well, great database performance starts with great database design. Even the best possible hardware can be brought to its knees by bad code and bad design.

For every database platform in the world there exists a finite number of bottlenecks: disk I/O, memory, CPU, network, locking/blocking. I don’t care what database platform you have or if you are running on Linux, Unix, or Windows. Those are your constraints.

Which one of those is a result of code, design, and data distribution?

Many times we are forced to throw hardware at the problem because we can’t touch code. This is especially true for 3rd party (i.e., vendor) products that we are asked to install and administer. But hardware has its limits, even today.

If you get 100 data professionals in a room (promise them bacon and they will come, trust me on this) they will argue with each other as to which resource bottleneck is the cause of a majority of their problems. Some will say memory, others CPU, and others will swear it is disk I/O. Very few will blame the network (although I usually start there by default in a half-joking manner). They will all want to throw hardware at the problem, very few will look to dig in and examine the database design along with code.

How To Identify Performance Tuning Opportunities

I’ve written before about the things you don’t want to do when designing a database. But what about for systems that you have inherited? Assuming you have exhausted your hardware enhancements, what can you do then?

Here’s a few performance tuning tips for you to consider:

- You can take the time to examine your plan cache, looking for ways to improve performance (including finding missing indexes).

- You can examine existing indexes to remove duplicates, as duplicate indexes can be a drain on performance.

- Since we are talking about indexes, it wouldn’t hurt to make certain your statistics are up to date.

- Review the placement of data, log, and backup files for your server. Many times SQL installations are done by accepting all defaults, which can result in everything ending up on the same drive.

- Examine the memory configuration for the server and the instance of SQL.

- Check the power plan settings for your server.

- Examine the server to see if other applications are installed, as they consume resources too.

- If your server is running on VMWare, then you may want to use your favorite monitoring tool to correlate query performance to the performance in your virtual environment.

- Review to make certain you have optimized tempdb for performance.

- Lastly, you may not need all your data! Review your archiving strategy (if it exists), or consider deploying a partitioning strategy.

The above list is not meant to be comprehensive, just as a starting point for where you can possibly find some easy tuning opportunities.

Summary

The next time you throw hardware at a problem and your performance is still dismal, come back here and try a few of the items listed above.

Or, think about trying them BEFORE someone talks you into spending thousands of dollars on hardware. If it turns out that you end up saving some money as a result please keep in mind my fondness for wine and bacon.

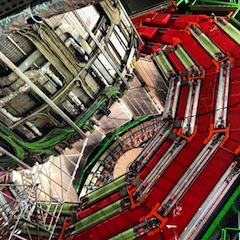

Then again, “throwing money at the problem” seems to be an idea that has deep roots in our history:

Dude, why all that? Just use NOLOCK or READ UNCOMMITTED everywhere and get it over with, it’s the Turbo button! I don’t even need a MCM Cert to know that! For those who don’t know me well, I’m being sarcastic by the way.

Nice post. I would have added two small things. 1. Check Auto-Close and Auto-Shrink are OFF maybe put a policy on to do that for you. 2. Storage array is setup properly (ex 64KB Clusters recommended by MS for example, not everything on one SAN). My two cents.

Thanks!

Another commonly overlooked performance boost can come from data compression (page/row) or the new in memory tables to reduce IO contention.

Andrew,

Agreed, those are two additional options to consider.

Nice photo.

Like that? I got it from that one time I didn’t visit CERN.