Data modeling is dead. It is a product of an era that has passed; that of corporate silos that created their own versions of software to suit their own needs.

That is no longer the world in which we live. That era was one that had high costs associated with building and maintaining a database of customers.

Today’s era is one where you can subscribe to Salesforce.com for just a few dollars a day. You can decide for yourself to run a new report. How much did that same report cost in the old era? How long would it take for IT to deliver that report? That’s why businesses today are using such services: they reduce both time and cost.

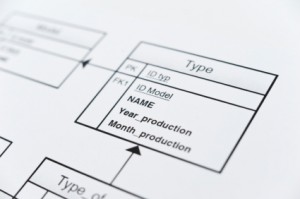

Recently Karen López (blog | @datachick) wrote an article that gives a good background on the differences between conceptual, logical, and physical data modeling. The TL;DR version: conceptual modeling is when the business helps to map out its needs at a strategic level. Logical modeling is when a data architect gets involved and describes the business data requirements independently of the DBMS, usage, or organizational constraints on the data. Physical modeling is when a data architect and a DBA ensure that the logical model will meet the business needs for things like performance and recovery. A physical model is designed for a specific version of a DBMS or other data store.

On paper and in classrooms these mythological ideals sound great. In the non-academic world, database modeling is not done as much as you might think. With the advent of cloud services such as Azure, I can build and deploy applications without ever needing a single database design or modeling session.

Here are the reasons why modeling doesn’t matter for most of us anymore.

Third Party Software Packages

We’ve all been there: someone on the business side gets a call from a salesman inviting them to a three-martini lunch. By the time lunch is over, the business has decided that the salesman’s product is *THE* product that your business must have in order to do…well, it doesn’t matter what it promises to do, really. The point here is that your business decides to purchase some software that has a database backend. If you are lucky, it is a database platform you can support, but that is not always the case. But hey, a database is a database, right? [Despite our shop being a dedicated Microsoft shop, I once had a manager demand to know “how much longer” it would take for my team to support Oracle, because we were apparently holding the business back.]

This software comes in-house, gets installed, and guess what? All of your best practices and policies get thrown out the window. You make exceptions to every rule because this software requires ‘sa’ access, it needs a dedicated server, and you aren’t allowed to make any schema changes (not even additional indexes) to improve performance (I’m looking at you, SharePoint).

While it is a recommended best practice to do logical and physical modeling together, those tasks are not possible when purchasing a third-party product. More than three-quarters of the apps supported by my customers right now are from third-party vendors, and very little in-house development is being done. That means they get the conceptual, logical, and physical model that somebody else built for them. As a DBA, you won’t even be able to touch anything.

You’re stuck with a pickup truck and your business expected a Ferrari.

Software-as-a-service (SaaS)

This is the same thing as above except that you don’t host the system. The DBA doesn’t have any input into…anything! Of course the DBA will get blamed for anything that goes wrong, but that’s a topic for a different blog post.

With SaaS you get someone else’s conceptual, logical, and physical design, and you simply hope it will be good enough for your needs most of the time. Salesforce is the prime example here. Your business does not need to purchase and host its own customer relationship management system these days; it can just pay for the service. That saves both money and time, because it also means you don’t need to sit through those pesky database design or modeling meetings.

Data-as-a-service (DaaS)

This is like SaaS, except that all you do is pull in data feeds. Once you pull in the feeds, you might be thinking “hey, we’ll need to store that data”, which would mean the need for some actual modeling. But the reality is that it won’t get stored inside a traditional database. It is much more likely to be stored in a spreadsheet inside another application such as SharePoint. Don’t pretend you’ve never seen this before. If you haven’t, just wait your turn; you will see it soon enough.

Here’s the crazy part: as much as the need for data modeling seems to be diminishing these days, I also see that it is more important now than ever before. All of those companies providing SaaS and DaaS need data modelers on staff who can ensure their services can be consumed and shared easily. That stuff doesn’t just happen by magic. However easily I can deploy an application to Azure without knowing anything about modeling, my application will not scale beyond a certain point without a proper database model and design. User needs must be anticipated, logical models are needed to ensure data quality, and physical designs are needed for performance and recovery. Companies run a huge risk by not having these.

Every time I meet with a customer who says “we can’t touch the app”, I feel their pain. I know that our careers as data professionals are heading into the Cloud, and that oftentimes our hands are tied. But as long as people are still building applications, and as long as data needs to get shared between two endpoints, there will always be a need for someone who understands how best to keep that data organized.

That’s where the data modeler, or architect, is needed most.

Even today.

Packaged applications are generic and invariably subject to some degree of in-house customization, which involves development and maintenance. Many packaged applications combine transaction processing and decision support functions against a single physical database. Performance and scalability limitations often result in the creation of separate databases, such as operational data store (ODS) or data mart (DM) environments. However, the big value of data modeling right now is reducing the cost of system maintenance in your organization. Accurately capturing business data requirements during the design phase reduces the time and cost associated with error correction and enhancements during the maintenance phase of the system lifecycle.

Data modeling is an essential foundation for designing a database that will satisfy business requirements for years to come.

Thanks for the comment, and I agree that there are benefits.

However, most CIOs are all about reducing their total cost of ownership, and data modelers are just an expense these days when you can just purchase vendor applications.

Just purchasing vendor applications is sorta like just purchasing a Sawzall and some new beams. It just works, right?

If companies would just ask to inspect a system’s data model before purchasing it, they would be shocked at how badly designed most are. I think things may come full circle when companies realize what a bad idea it has been to have expensive jalopy systems badly integrated with each other. Worse still is giving or sharing your lifeblood data with Cloud providers. Not even knowing the people holding, managing, and using your data is beyond me. It is like giving your gold to someone to lock away from you on their terms, without even giving you a key. I don’t think money is saved in the long run, and crazier still is the intelligence lost. But it’s a catchy name, Cloud… so many think it is something ethereal. Have you been on the receiving end of data coming from SFDC, with the unvalidated, nasty data that may flow?

Robin,

I wish I could agree with this, but I can’t. I’ve never seen a company turn down a software package because of bad design. With IT being in a subservient role to the business, if the business says the software is a “must”, then it gets purchased and IT has to support it. If that means higher costs, so be it; that’s the cost of doing business.

It is incredibly wasteful, in many ways.

So far I have never seen a person or group shopping for software ask to examine the design. Nor do I think the shoppers would even comprehend the design, because most seem to be nontechnical, or only superficially technical, in the area of database design. Like you, I do see IT in a subservient position, supporting whatever has been purchased. And IT group managers are usually very grateful to get the support money.

My experience has been that the software gets installed as a test or a proof of concept. Once we see how it performs, examine the design, etc., we provide feedback. By then, however, it would seem the deal has been sealed. No matter how dreadful the software may be, the business always gets their way and IT is forced to support something undesirable.

So you purchase a bunch of applications or services bundled with data, sure. But then you have to use them together. You need federation, and for that you need modeling. If your purchased applications come with models, that reduces your integration time and cost, but it just moves the problem around.

There are two sides to this story: Packaged Application Development and Maintenance, and the Role of a “Data Modeler”.

Yes, there are packaged applications for almost everything, but people still need to develop, extend, and maintain them; that is the first side. A packaged application, whether client/server or cloud, needs to be maintained. For maintenance, the data model will have to be tweaked, and to do so we need an understanding of the model as well as knowledge of the functionality to be implemented, so modeling is there for real.

The flip side of this is that, irrespective of where the data lives (cloud or client/server, packaged or homegrown applications), that data needs to be analyzed to the nth level, and this can only be done in a warehouse, data mart, or reporting data mart, which can be traditional or built on big data stores. These warehouses and marts need to be designed and tuned so that data can be made available in the easiest and simplest form for analysis. So here again the need for a data modeling effort does not diminish. That’s the reason the need for “Data Modelers / Architects” experienced in Big Data has increased over the past year or two.

The second side is that the conventional role of a “Data Modeler” is insufficient for an organization today; organizations are looking for more advice and output from this role. The need today is for a Data Modeler to do modeling, data integration (various sources interacting with an application or warehouse), data strategy, and data stewardship, as well as to have a very good grip on the Integration Architecture and Enterprise Architecture of a company.

So my belief is that at all levels, whether developing and/or maintaining an application (conventional and/or cloud based) or a data warehouse of any flavor (conventional and/or big data based), the need for a Data Modeler and Data Architect has not diminished.

I’ve heard customers say ‘our ERP is like a Black Box’ so many times. Normally this means they don’t really understand the ERP ‘internals’. And that’s not necessarily a problem if everything in the organization is being handled by the ERP. But in my experience, that’s pretty rare. Whilst the ERP might have been implemented as a replacement for a bunch of legacy systems, it often ends up having to coexist with lots of other applications. Or there is a need to get data out of the ERP for some kind of BI strategy.

Either way, you need to answer the kind of questions that a good data model can help with.

And then the challenge is – how do I understand what’s in the ERP from a data perspective?

I’m starting to think that the crux of the discussion comes down to this: people want things to be fast and easy. Taking the time to do a proper data model gets in the way of “fast”, and for most it isn’t easy, either. And even harder if you don’t do it right up front.

So many times I’ve seen something new come along that claims to be faster and easier. More often than not they prove to be neither, and end up leaving a bigger mess to clean up than when they started.

I’ve written some more now: http://www.datamodel.com/index.php/2016/02/16/is-logical-data-modeling-dead/

It seems this debate doesn’t end…

Thomas, very nice summary of the whole question of “data modeling”.

May I add a comment, in response to your reference to Salesforce, which is oddly enough so incredibly successful as a sales platform. But as a professional B2B sales person who also has some knowledge of software and data modeling, I see that Salesforce isn’t as good as it could be. And having worked with some folks who were in a position to know, apparently some of this limitation, it is claimed, is due to the data model. If senior business executives aren’t interested in knowing what goes on “inside the black box” of technology, then demand for good technology is reduced, and you “get what you get”. (Any review of sales people’s comments on Salesforce, which you can find in multiple locations, will give you a taste of the frustration that people have with this software.)

You make the broader point that data modeling requirements inside the corporate are reduced because ERP and other packaged applications deliver pre-built or implicit data models (and process models too). And no one ever rejects an application because of “a poor data model”. I think they should — at least by proxy, by which I mean that purchasing executives should understand how any prospective software product supports a given business domain. And in making such an investigation, the data model needs to be evaluated. Why? Because the data model is the single most important technological leverage point for determining “what the application can say, and do”. The data model determines the “statements that can be made by your software”.

If you want to be able to say “accounts receivable”, then you have to have that as an entity in your system. And data modeling, more specifically relational technology, is the only scientific foundation that makes that possible. Everything else is the modern version of the GoTo statement, or in today’s terms, just coding. Objects are just coding. And coding is subject to the laws of complexity and entropy. And after a while, you find yourself in a brittle, complex environment.

Here’s another example. “Evernote” is an extremely popular note-taking tool, and it is cloud-enabled. It’s very good. They have also published their data model, if I understand what I’ve seen correctly. Interestingly enough, the Evernote data model is extremely, even shockingly, simple. One thing I note (and I stand to be corrected) is that Evernote has no entity that could be used to support a “narrative” or “story”, which could be considered a “linked chain of notes”. For this reason, Evernote has no sense of narrative or story: all notes can be tagged, which results in “buckets” of the unordered kind, although perhaps viewable sorted by date. This is an example of software where something of interest to note-takers seems to be excluded from functionality by virtue of the data model. You could code around this; a new set of tables would be the best solution.
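The “new set of tables” idea can be sketched roughly as follows. This is a minimal illustration only, assuming SQLite through Python’s standard `sqlite3` module; the `note`, `story`, and `story_note` tables and the `build_demo`/`story_titles` helpers are hypothetical names and are not Evernote’s actual data model. Instead of a literal linked list of notes, it uses an associative table with a `position` column, which is one simple way to give a story an explicit order that tags alone cannot express.

```python
import sqlite3
from typing import List

# Hypothetical schema: table and column names are illustrative assumptions,
# not Evernote's published data model.
SCHEMA = """
CREATE TABLE note (
    note_id INTEGER PRIMARY KEY,
    title   TEXT NOT NULL
);
CREATE TABLE story (
    story_id INTEGER PRIMARY KEY,
    name     TEXT NOT NULL
);
-- Associative table: places a note at an ordered position within a story.
CREATE TABLE story_note (
    story_id INTEGER NOT NULL REFERENCES story(story_id),
    note_id  INTEGER NOT NULL REFERENCES note(note_id),
    position INTEGER NOT NULL,
    PRIMARY KEY (story_id, position)
);
"""


def build_demo() -> sqlite3.Connection:
    """Create an in-memory database with one three-note story."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO note (note_id, title) VALUES (?, ?)",
        [(1, "Kickoff"), (2, "Site visit"), (3, "Follow-up")],
    )
    conn.execute("INSERT INTO story (story_id, name) VALUES (1, 'Acme deal')")
    # Rows are inserted out of order on purpose: the order of the story
    # comes from the position column, not from insertion order or tags.
    conn.executemany(
        "INSERT INTO story_note (story_id, note_id, position) VALUES (?, ?, ?)",
        [(1, 3, 3), (1, 1, 1), (1, 2, 2)],
    )
    return conn


def story_titles(conn: sqlite3.Connection, story_id: int) -> List[str]:
    """Return the note titles of a story in narrative order."""
    rows = conn.execute(
        """SELECT n.title
           FROM story_note AS sn
           JOIN note AS n ON n.note_id = sn.note_id
           WHERE sn.story_id = ?
           ORDER BY sn.position""",
        (story_id,),
    )
    return [title for (title,) in rows]
```

Here `story_titles(build_demo(), 1)` yields the notes in story order ("Kickoff", "Site visit", "Follow-up"). Because ordering lives in its own table, the same note can appear in several stories without changing the `note` table at all.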

Economically there’s no sense in everyone building their own applications, and certainly all companies share some common practices, which can be delivered in common software. But that doesn’t take away from the fact that executives have a responsibility to understand that their business freedom may be constrained — in ways that they would definitely not want — if there is no sense of “owning and understanding and taking responsibility for” the platform of business semantics which is first of all defined by a data model.

Keep on modeling!

John,

Thanks so much for the comment and feedback, much appreciated!

Does this mean that data modeling tools like ERwin and ER/Studio are also dead, or going to die soon? I think as long as we store data for an enterprise, we need to structure it, no matter when and how.

The tools aren’t dying, no. But there is a shift from companies storing their own data versus paying for a platform. I give some examples above, such as Salesforce. Why build your own CRM, or host some 3rd party package yourself, when you can just pay for a service?

I’m rather late coming to this party, but the content and sentiments really chime with my experience.

Who develops big systems from scratch any more? Most companies buy a package and then extend it (if necessary) to fit their requirements.

The problem there is that the package rarely replaces all the legacy systems, so those systems end up having to coexist with the package. And there is still a need to get data out into some kind of BI world, even if it’s in a package.

These are classic jobs for the data modeller:

1) I need to interface XYZ package with my home-grown Widget system. How do I understand the data structure of both so I can see what the fit is?

2) I need to pull data out of the ‘black box’ package to put into my Warehouse – where do I find what I need?
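The second job, finding which data you need inside a “black box” package, often starts with searching the database catalog by name. What follows is a minimal sketch, assuming SQLite through Python’s standard `sqlite3` module as a stand-in for the package’s real database (many other platforms expose similar metadata through `information_schema` views). The `find_columns` helper and the `cust_master`/`inv_hdr` table names are hypothetical. It only matches names, so it narrows the search; it does not tell you on its own which data is the right data.

```python
import sqlite3
from typing import List, Tuple


def find_columns(conn: sqlite3.Connection, keyword: str) -> List[Tuple[str, str]]:
    """Return (table, column) pairs whose table or column name contains keyword.

    Hypothetical helper: a case-insensitive name search over SQLite's catalog.
    """
    kw = keyword.lower()
    hits: List[Tuple[str, str]] = []
    tables = [
        name
        for (name,) in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    for table in tables:
        # Quote the identifier, since PRAGMA arguments cannot be bound parameters.
        quoted = '"' + table.replace('"', '""') + '"'
        for row in conn.execute(f"PRAGMA table_info({quoted})"):
            column = row[1]  # row = (cid, name, type, notnull, dflt_value, pk)
            if kw in table.lower() or kw in column.lower():
                hits.append((table, column))
    return hits
```

For example, against a hypothetical schema with tables `cust_master (cust_id, cust_name)` and `inv_hdr (inv_id, cust_id, amount_due)`, `find_columns(conn, "cust")` returns `[("cust_master", "cust_id"), ("cust_master", "cust_name"), ("inv_hdr", "cust_id")]`, which points straight at the join between the two tables. In a real ERP, cryptic names mean this is only a first pass.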

In the old days, the data model of each system was printed out and stuck to the wall in the DBA’s office. Try doing that with a SAP system that has 90,000 tables 🙂

Of course every BI/ETL tool under the sun purports to have an interface into these packages, but mostly they are just ‘pipes’ for siphoning off data. You still have to work out ‘which’ data.

The data modeller should be king (or queen) in this world, but as a profession, we are not good at blowing our own trumpets to let people know how we can help.

Nick,

Great comments, thanks for sharing.

Funny, but the other day I found myself thinking about this post, and then today you left a comment! We *might* be sharing some brain bandwidth, I don’t know.

My thoughts were about how current trends in tech are removing hardware as a bottleneck for applications and systems, and how data professionals need to know more about how data moves through the enterprise and less about racking servers. Data modeling is one of those areas where such expertise already sits, and I think data modelers are well poised to have a role in the enterprise for years to come.

Thanks!

Hi Thomas

My ‘pet’ subject is ERP metadata. But the general problem of ‘source data discovery’ is equally applicable to big ERP and CRM packages, and to homegrown systems. Our industry is very well tooled up for every aspect of data manipulation (modelling, ETL, BI…..) but the missing bit is data discovery. How to locate the data that I need for my Information Management project.

As you say, data modellers are well placed to address this, and I dream of the data modeller stepping into a phone booth and re-emerging as ‘Data Discovery Man’: see how he locates those Parent-Child relationships!

The barrier that I see to this is the perception many have of the stereotype data modeller. There are a good number of our band that are overly concerned with the niceties of Third Normal Form and what constitutes a Conceptual rather than a Logical model. Many in the wider BI world will look upon the data modeller as a barrier to getting the project done, rather than as a help. IMHO, that’s something we have to change.

I *love* the idea of “Data Discovery Man” so much, I’m going to pick that as my Halloween costume this year.

Locating data is indeed a difficult issue. But one area that I see data modelers helping with is knowing the right questions to ask. Because without the right questions, you will never know if you have the right data.

If data modelers get better at asking the right questions, and helping to locate data, then they will be able to easily step into the New World that is coming.