That’s what you have inside your database, I’m certain of it.

I am also certain that you can take steps to avoid having large amounts of empty space inside of your database. The first step, as always, is admitting you have a problem. In this case that means you need to know how to look for the problem.

That’s what I’m here to do for you today. Let’s go look for some empty space together, shall we?

The Problem

Here’s the problem: SQL Server stores data in 8k pages. OK, so that’s not the problem, really.

The problem is when you have row sizes that are slightly more than 4k in length, but far less than 8k. That’s the wasted space, and it’s what I’m here to help you with today. Every time you insert a row that is 5k in size, you take up a full data page on disk. Imagine, if you will, having 100,000 rows in a table, each one at 5k in length. How much space will those 100,000 rows consume on disk? Ideally you would like for it to be close to 500Mb, right? Well it will be closer to 800Mb. That extra 300Mb? Yeah, just empty space. It’s allocated, but not used. And every time you run a query against that table you may need to pull back roughly 100,000 logical I/O (if you needed each row, and each row was on its own data page).

Imagine if you could get two rows of data onto a page. Then the same 100,000 rows would only need 50,000 pages, which is less than 400Mb in space, and more importantly it would only be (at most) 50,000 logical I/O. You may have just cut your query time in half!

We have a handful of ways to find out the size of a row, but here’s the method I prefer: the simplest.

The simplest way for me to find out if the rows are larger than 4k is to take the size of a table and divide by the number of rows. I said “simplest”, but that doesn’t necessarily mean “best”. But for those of you still running SQL2000 then that is probably the easiest thing to do. Good luck with that.

For those of us here in the 21st century we can use the dm_db_index_physical_stats system function in order to get details about the average record size. For example, this script would give us some details for the HumanResources.JobCandidate table in AdventureWorks2012:

SELECT avg_record_size_in_bytes

, avg_page_space_used_in_percent

, OBJECT_NAME(object_id) AS [ObjectName]

FROM sys.dm_db_index_physical_stats(db_id(), object_id('HumanResources.JobCandidate'), 1, null, 'detailed')

It would be rather tedious to run this for each and every table manually, so let’s put together something that returns details for every table in a database. We want to add some filters as well, to reduce the amount of noise. We don’t need to worry about the small stuff, like a row with an average record size of 11 bytes.

We are not concerned with non-clustered indexes in this example, we will want to focus on heaps and clustered indexes. We also want to focus on tables that have a row with a maximum size greater than 4k (because we want to get at least two rows onto a page as often as possible), and we are not going to be concerned with very small tables that have few rows or few pages.

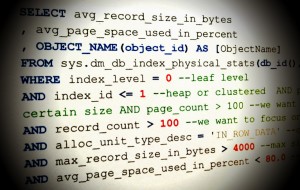

With all the being said, here’s the script to get it done for us:

SELECT avg_record_size_in_bytes

, avg_page_space_used_in_percent

, OBJECT_NAME(object_id) AS [ObjectName]

FROM sys.dm_db_index_physical_stats(db_id(), NULL, NULL, null, 'detailed')

WHERE index_level = 0 --leaf level

AND index_id <= 1 --heap or clustered

AND page_count > 10 --we want to focus on tables of certain size

AND record_count > 100 --we want to focus on tables with certain rowcounts

AND alloc_unit_type_desc = 'IN_ROW_DATA' --only in row, not LOB

AND max_record_size_in_bytes > 4000 --max size of rows greater than 4k

AND avg_page_space_used_in_percent < 80.0 --focus on pages less than 80% full

Feel free to adjust the filters as you feel necessary. You may only want to focus on tables with more than 1,000 pages, for example. I wanted to give you a script that would return a few rows from AdventureWorks2012.

OK, so now we have identified some tables to investigate, how do we fix them? Great question, and I have some suggestions for you.

1. Review your design

Take a look at that table. No, I mean LOOK AT IT!

Do you see any columns with redundant data? Is the table really wide because the data was denormalized in an effort to make reporting faster? Or is your "database" really just a former excel spreadsheet that got migrated to MS Access a dozen years ago and has since been promoted through versions of SQL Server like a star athlete through high school?

Perhaps there are two columns for email addresses, possibly leftover from a refactoring project that never got cleaned up. Yeah, go ahead and pretend like you've worked on a refactoring project that actually cleaned up the old data. I've yet to meet anyone that has seen such project through to completion, many projects don't ever get around to removing that old data.

It might be time to review the design for this database however any changes you make are likely going to be disruptive to the business and require testing, change control, etc. It may cost you more to fix than to simply leave it alone! You will need to document what the costs are in terms of disk storage and query performance. If you can show that you can save money in the short term by making some changes then you are likely to get traction for a design review.

It is also possible you are working with vendor products that cannot be changed. Let's assume that you can make changes and therefore you need to go and talk to your development team and discuss more about the business requirements. Get up off your arse and do it, go and ask the questions. You may be surprised to find people willing to help make things better.

If you suspect that you have some column datatypes that could use some additional investigation then might I suggest you read the next section...

2. Examine columns for right-sizing

What do you see for column datatypes. Do you see a bunch of CHAR(), NCHAR(), DATETIME, and BIGINTs defined? Do you need those types, or would smaller (or variable) ones suffice? Do you have ANY idea? Probably not, and that's why I've blogged about this before, more than once actually. It isn't hard to avoid datatype mismatches. If you suspect that you have some tables in a database that could use some right-sizing then you can run my scripts in order to examine those columns a bit more closely.

This is why I have my script focus on the max size of a row as opposed to the average size of a row. I want to know if the row has a chance to be greater than 4k as that is the row I need to focus on altering first, in order to get that row to have a max of less than 4k, if possible. Does this guarantee page fullness? Absolutely not! It just happens to be where I am drawing a line in the sand for this particular example.

Finding columns that need adjusting then gives you a new problem to solve: trying to convince your business users that a change is needed. Many times I have heard managers simply say "let's wait for a problem to happen before we try to fix anything". If you still can't make a compelling case for change you may want to consider a third alternative...

3. Compression

You can enable row and/or page compression on the table in order to help reduce the I/O. Enabling compression will not require any of those pesky code changes that people don't want to make. It doesn't mean that you shouldn't test the effects of enabling compression, it just means that it is less intrusive than changing the datatypes for columns.

Compression can give you a boost in performance by allowing for more rows to fit onto a sibgle 8k page. However there is additional overhead with compression, and if you table has a high volume of DUI statements (that's Delete, Update, and Inserts) you may not want to think about compression at all.

In addition to the increase in performance you also get a benefit in reduced disk space. You can get an estimate of the space saved by using the sp_estimate_data_compression_savings system stored procedure. This example will give me the estimate for enabling row compression on the Sales.SalesOrderDetail table:

EXEC sp_estimate_data_compression_savings 'Sales', 'SalesOrderDetail', NULL, NULL, 'ROW' ;

GO

I've given you a way to identify if you are seeing a lot of wasted space and also three ways to help fix the issue once found.

The choice to act is yours, of course.